Friday, October 28, 2022

Synopsis:

Many areas of science study and use methods for evaluating information, especially in the form of predictions. This workshop brings together theorists from different fields, including machine learning and economics and computation. How does each area approach the problem of choosing or designing an evaluation method, such as a loss function or scoring rule? How might evaluation tools developed in one field be useful in others? The workshop aims to foster interdisciplinary discussion on these and related topics.

Speakers:

Rafael Frongillo (University of Colorado, Boulder), Yang Liu (University of California, Santa Cruz), Weijie Su (University of Pennsylvania, Wharton), Mallesh Pai (Rice University)

About the Series

The IDEAL workshop series brings in four experts on topics related to the foundations of data science to present their perspectives and research on a common theme. Chicago-area researchers with an interest in the foundations of data science are welcome to attend.

This workshop is organized by Bo Waggoner (University of Colorado, Boulder) and Zhaoran Wang (Northwestern University).

Logistics

- Date: Friday, October 28, 2022

- In-person: Northwestern University Mudd Library 3514

- Virtual: via Gather

Schedule

- 10:30-11:00: Breakfast

- 11:00-11:05: Opening Remarks

- 11:05-11:45: Mallesh Pai, "The Wisdom of the Crowd and Higher-Order Beliefs"

- 11:50-12:30: Weijie Su, "A Truthful Owner-Assisted Scoring Mechanism"

- 12:35-2:00: Lunch Break

- 2:00-2:40: Yang Liu, "Learning from Noisy Labels without Knowing Noise Rates"

- 2:45-3:25: Rafael Frongillo, "Elicitation in Economics and Machine Learning"

- 3:30-4:00: Coffee Break

- 4:00-5:30: Individual and group meetings with students, faculty, and postdoc attendees

Titles and Abstracts

Speaker: Mallesh Pai

Title: The Wisdom of the Crowd and Higher-Order Beliefs

Abstract: The classic wisdom-of-the-crowd

(joint work with Yi-Chun Chen and Manuel Mueller-Frank).

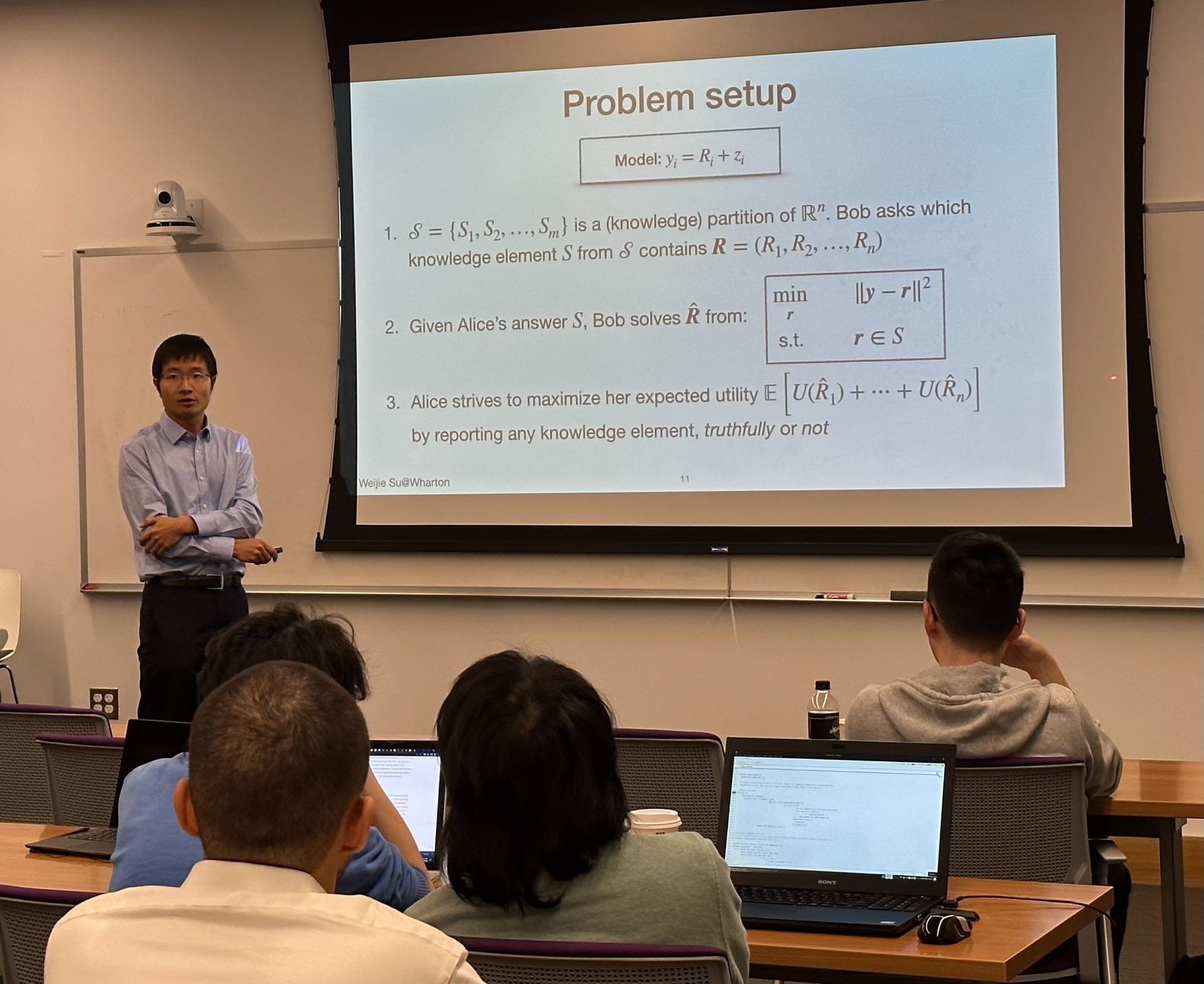

Speaker: Weijie Su

Title: A Truthful Owner-Assisted Scoring Mechanism

Abstract: Alice submits a large number of papers to a machine learning conference and knows the ground-truth quality of her papers. Given noisy ratings provided by independent reviewers, can Bob obtain accurate estimates of the ground-truth quality of the papers by asking Alice a question about the ground truth? First, if Alice is to answer the question truthfully because doing so maximizes her payoff, modeled as an additive convex utility over all her papers, we show that the question must be formulated as pairwise comparisons between her papers. Moreover, if Alice is required to provide a ranking of her papers, which is the most fine-grained question via pairwise comparisons, we prove that she would be truth-telling. By incorporating the ground-truth ranking, we show that Bob can obtain an estimator with the optimal squared error in certain regimes among all possible ways of truthful information elicitation. In addition, the estimated ratings are substantially more accurate than the raw ratings when the number of papers is large and the raw ratings are very noisy. Finally, we conclude the talk with several extensions and some refinements for practical considerations. This is based on arXiv:2206.08149 and arXiv:2110.14802.
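To make Bob's estimation step concrete, here is a minimal Python sketch. It assumes, following our reading of the Isotonic Mechanism in arXiv:2110.14802 (the abstract above does not spell out the estimator), that each paper has a single raw rating (for example, the average of its reviews) and that Bob's estimate is the least-squares fit to those ratings subject to being non-increasing along Alice's ranking. The fit is computed with the pool-adjacent-violators algorithm. The function name `isotonic_mechanism` and its arguments are hypothetical, chosen for illustration only.

```python
from typing import List, Sequence


def isotonic_mechanism(raw_scores: Sequence[float], ranking: Sequence[int]) -> List[float]:
    """Hypothetical helper: adjust raw ratings to agree with the owner's ranking.

    raw_scores[i] is the (e.g. averaged) review rating of paper i.
    ranking lists paper indices from the one the owner ranks best to the worst.
    Returns the least-squares fit to raw_scores that is non-increasing along
    the ranking, indexed like raw_scores.
    """
    # Reorder ratings from the best-ranked to the worst-ranked paper.
    ordered = [float(raw_scores[i]) for i in ranking]

    # Pool Adjacent Violators: keep blocks as (sum, count) with non-increasing means.
    sums: List[float] = []
    counts: List[int] = []
    for value in ordered:
        sums.append(value)
        counts.append(1)
        # A higher-ranked block with a lower mean than the block after it
        # contradicts the ranking, so merge the two into their common mean.
        while len(sums) > 1 and sums[-2] / counts[-2] < sums[-1] / counts[-1]:
            s, c = sums.pop(), counts.pop()
            sums[-1] += s
            counts[-1] += c

    # Expand each block back to one fitted value per paper, in ranking order.
    fitted: List[float] = []
    for s, c in zip(sums, counts):
        fitted.extend([s / c] * c)

    # Map fitted values back to the original paper indices.
    adjusted = [0.0] * len(raw_scores)
    for position, paper in enumerate(ranking):
        adjusted[paper] = fitted[position]
    return adjusted


if __name__ == "__main__":
    # Alice ranks paper 1 best, then paper 0, then paper 2.
    print(isotonic_mechanism([3.0, 8.0, 4.0], [1, 0, 2]))
```

With raw ratings `[3.0, 8.0, 4.0]` and the ranking paper 1, paper 0, paper 2, the sketch prints `[3.5, 8.0, 3.5]`: Alice places paper 0 above paper 2 while their raw ratings say the opposite, so the two ratings are pooled to their common mean of 3.5.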

Speaker: Yang Liu

Title: Learning from Noisy Labels without Knowing Noise Rates

Abstract: Learning with noisy labels is a prevalent challenge in machine learning: in supervised learning, the training labels are often solicited from human annotators and thus encode human-level mistakes; in semi-supervised learning, the artificially supervised pseudo-labels are inherently imperfect. The list goes on. Existing approaches with theoretical guarantees often require practitioners to specify a set of parameters controlling the severity of label noise in the problem. These specifications are either assumed to be given or estimated using additional approaches.

In this talk, I introduce peer loss functions, which enable learning from noisy labels and do not require a priori specification of the noise rates. Peer loss functions associate each training sample with a specific form of “peer” sample, which helps evaluate a classifier’s predictions jointly. We show that, under mild conditions, performing empirical risk minimization (ERM) with peer loss functions on the noisy dataset leads to the optimal or a near-optimal classifier as if performing ERM over the clean training data. Peer loss provides a way to simplify model development when facing potentially noisy training labels. I will also discuss extensions of peer loss and some emerging challenges concerning biased data.
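The idea of pairing each sample with a "peer" can be sketched in a few lines of Python. This is a minimal sketch assuming the basic form of peer loss as we understand it from the published work under the talk's title: for each sample n, the ordinary loss on the prediction f(x_n) and noisy label y_n, minus the loss on a peer pair formed from the prediction for one randomly drawn sample n1 and the noisy label of an independently drawn sample n2. The 0-1 loss, the weight `alpha` (with `alpha = 1` giving the unweighted form), and all function names are illustrative assumptions. Training would minimize this quantity over classifiers; the sketch only evaluates it for fixed predictions.

```python
import random
from typing import Callable, Sequence


def zero_one_loss(prediction: int, label: int) -> float:
    """0-1 loss: 1 for a wrong prediction, 0 for a correct one."""
    return 0.0 if prediction == label else 1.0


def peer_loss(
    predictions: Sequence[int],
    noisy_labels: Sequence[int],
    loss: Callable[[int, int], float] = zero_one_loss,
    alpha: float = 1.0,
    seed: int = 0,
) -> float:
    """Average peer loss of a classifier's predictions on a noisily labeled dataset.

    predictions[n] is the classifier's output f(x_n); noisy_labels[n] is the
    observed (possibly wrong) label. For each sample n, indices n1 and n2 are
    drawn independently and uniformly, and the peer term loss(f(x_n1), y_n2),
    weighted by the illustrative alpha, is subtracted.
    """
    rng = random.Random(seed)  # fixed seed so the peer sampling is reproducible
    size = len(predictions)
    total = 0.0
    for n in range(size):
        n1 = rng.randrange(size)  # sample whose prediction is used in the peer term
        n2 = rng.randrange(size)  # independent sample whose label is used
        total += loss(predictions[n], noisy_labels[n]) - alpha * loss(
            predictions[n1], noisy_labels[n2]
        )
    return total / size


if __name__ == "__main__":
    print(peer_loss([0, 1, 1, 0], [0, 1, 0, 0]))
```

One property is visible even in this sketch: a classifier that outputs the same label for every input earns zero peer loss in expectation, since its loss on each sample is offset by its loss on a randomly paired label. For instance, predictions `[0, 0, 0]` against noisy labels `[1, 1, 1]` give exactly `0.0`, so ignoring the features gains nothing.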

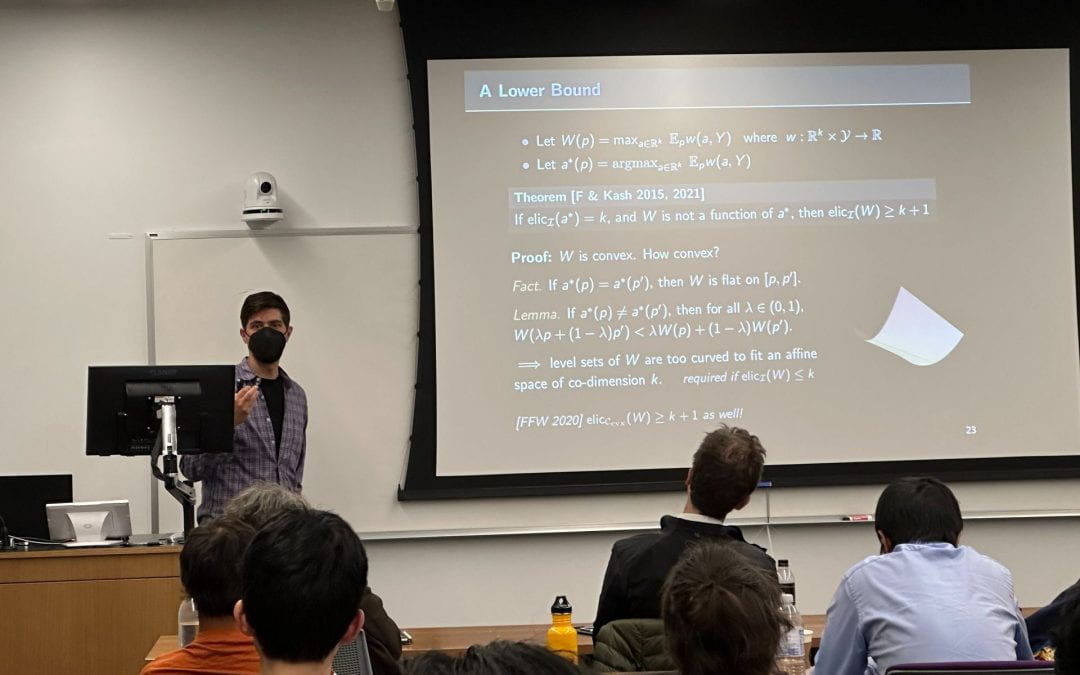

Speaker: Rafael Frongillo

Title: Elicitation in Economics and Machine Learning

Abstract: Loss functions are a core component of machine learning algorithms, as they provide the algorithm with a measure of prediction error to minimize. Loss functions are also a core component of mechanisms to elicit information from strategic agents, for essentially the same underlying reason. This talk will begin with an overview of how the design of loss functions connects to these and related settings. We will then address an observation which has spurred much of the recent research in machine learning, statistics, and finance in this area: some statistics are not elicitable directly, or in the case of machine learning, are intractable to elicit directly. Prominent examples include risk measures in statistics and finance, and nearly every classification-like learning task. In all of these settings, we instead turn to indirect elicitation, where one elicits an alternative statistic from which one can derive the desired statistic. Indirect elicitation leads naturally to the concept of elicitation complexity: the number of dimensions required for this alternative statistic. We will give tools to prove upper and lower bounds on elicitation complexity, with a few applications, and conclude with several interesting open problems.
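As a small illustration of indirect elicitation (our own choice of example, not necessarily one from the talk): the variance of a distribution is a standard example of a statistic that is not directly elicitable, yet it can be derived from the mean and the second moment, each of which is elicited by squared loss. The Python sketch below recovers each statistic by minimizing average squared loss over a sample and then derives the variance from the resulting two-dimensional report. The ternary-search minimizer and all function names are illustrative assumptions.

```python
from typing import Callable, Sequence


def squared_loss(report: float, outcome: float) -> float:
    """Squared loss; its expected value is minimized by reporting the mean."""
    return (report - outcome) ** 2


def elicit(
    outcomes: Sequence[float],
    loss: Callable[[float, float], float],
    lo: float,
    hi: float,
    iterations: int = 200,
) -> float:
    """Report in [lo, hi] minimizing the average loss over observed outcomes.

    Uses ternary search, which assumes the average loss is convex in the
    report (true for squared loss).
    """
    def average_loss(report: float) -> float:
        return sum(loss(report, y) for y in outcomes) / len(outcomes)

    for _ in range(iterations):
        left = lo + (hi - lo) / 3
        right = hi - (hi - lo) / 3
        if average_loss(left) < average_loss(right):
            hi = right  # by convexity, the minimizer is not to the right of `right`
        else:
            lo = left  # by convexity, the minimizer is not to the left of `left`
    return (lo + hi) / 2


def variance_via_indirect_elicitation(samples: Sequence[float]) -> float:
    """Derive the variance from two elicited statistics: mean and second moment."""
    squares = [y * y for y in samples]
    mean = elicit(samples, squared_loss, min(samples), max(samples))
    second_moment = elicit(squares, squared_loss, min(squares), max(squares))
    # Var[Y] = E[Y^2] - (E[Y])^2: the variance is a function of the 2-D report.
    return second_moment - mean ** 2


if __name__ == "__main__":
    print(variance_via_indirect_elicitation([1.0, 2.0, 3.0, 4.0]))
```

On the sample `[1.0, 2.0, 3.0, 4.0]`, the sketch prints a value very close to 1.25, the variance of those four values (with denominator n). No loss in the sketch targets the variance directly. It is recovered from a two-dimensional report, which is why the elicitation complexity of variance is at most 2.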