Friday, October 14, 2022

Synopsis:

Incentives for information procurement are integral to a wide range of applications, including peer grading, peer review, prediction markets, crowd-sourcing, and conferring scientific credit. Meanwhile, mechanisms for information procurement have made large theoretical advances in recent years. This workshop will draw together practitioners who have deployed solutions in this space and experts in incentives and mechanisms to talk about existing connections, look for unexploited connections, and develop the next generation of information procurement research that will allow the theory to be further applied in these areas.

The workshop is part of the IDEAL Fall 2022 Special Quarter on Data Economics.

Speakers:

Kevin Leyton-Brown (Univ. of British Columbia), Raul Castro Fernandez (UChicago), Grant Schoenebeck (Univ. of Michigan), and Nihar Shah (Carnegie Mellon Univ.).

About the Series

The IDEAL workshop series brings in experts on topics related to the foundations of data science to present their perspective and research on a common theme. The series is intended for Chicago area researchers with an interest in the foundations of data science.

This workshop is organized by Jason Hartline (Northwestern University), Ian Kash (University of Illinois, Chicago), and Grant Schoenebeck (University of Michigan).

Logistics

- Date: Friday, October 14, 2022

- In-person: Northwestern University, Mudd Library 3514

- Virtual: via Gather

Transportation and parking

Schedule (tentative)

- 10:30-11:00: Breakfast

- 11:00-11:05: Opening Remarks

- 11:05-11:45: Grant Schoenebeck, "Peer prediction, Measurement Integrity, and the Pairing mechanism" (Video)

- 11:50-12:30: Raul Castro Fernandez, "Protecting Data Markets from Strategic Buyers"

- 12:35-2:00: Lunch Break

- 2:00-2:40: Nihar Shah, "Peer review, Incentives, and Open Problems" (Video)

- 2:45-3:25: Kevin Leyton-Brown, "Better Peer Review via AI" (Video)

- 3:30-4:00: Coffee Break

- 4:00-5:30: Individual/group meetings with student, faculty, and postdoc attendees

Titles and Abstracts

Speaker: Grant Schoenebeck

Title: Peer prediction, Measurement Integrity, and the Pairing mechanism

Abstract: Peer prediction is a theoretical technique for rewarding agents for producing high-quality information without the ability to directly verify the quality of their reports. While a handful of mechanisms have been proposed, and the technique promises to be useful in a variety of contexts such as peer review, peer grading, and crowd-sourcing, these mechanisms have not yet seen wide use.

First, I will propose measurement integrity, a property related to ex post reward fairness, as a novel desideratum for peer prediction mechanisms in many natural applications. Like robustness against strategic reporting, the property that has been the primary focus of the peer prediction literature, measurement integrity is an important consideration for understanding the practical performance of peer prediction mechanisms.

Second, I will present the pairing mechanism, a provably incentive compatible peer prediction mechanism that can leverage machine learning forecasts for an agent’s report (given the other agents’ reports) to work in a large variety of settings. The key theoretical technique is a variational interpretation of mutual information, which permits machine learning to estimate mutual information using only a few samples (and likely has other applications in machine learning even beyond strategic settings).

Finally, we will show empirically how augmenting the pairing mechanism with simple machine learning techniques can substantially improve upon the measurement integrity of other peer prediction mechanisms.

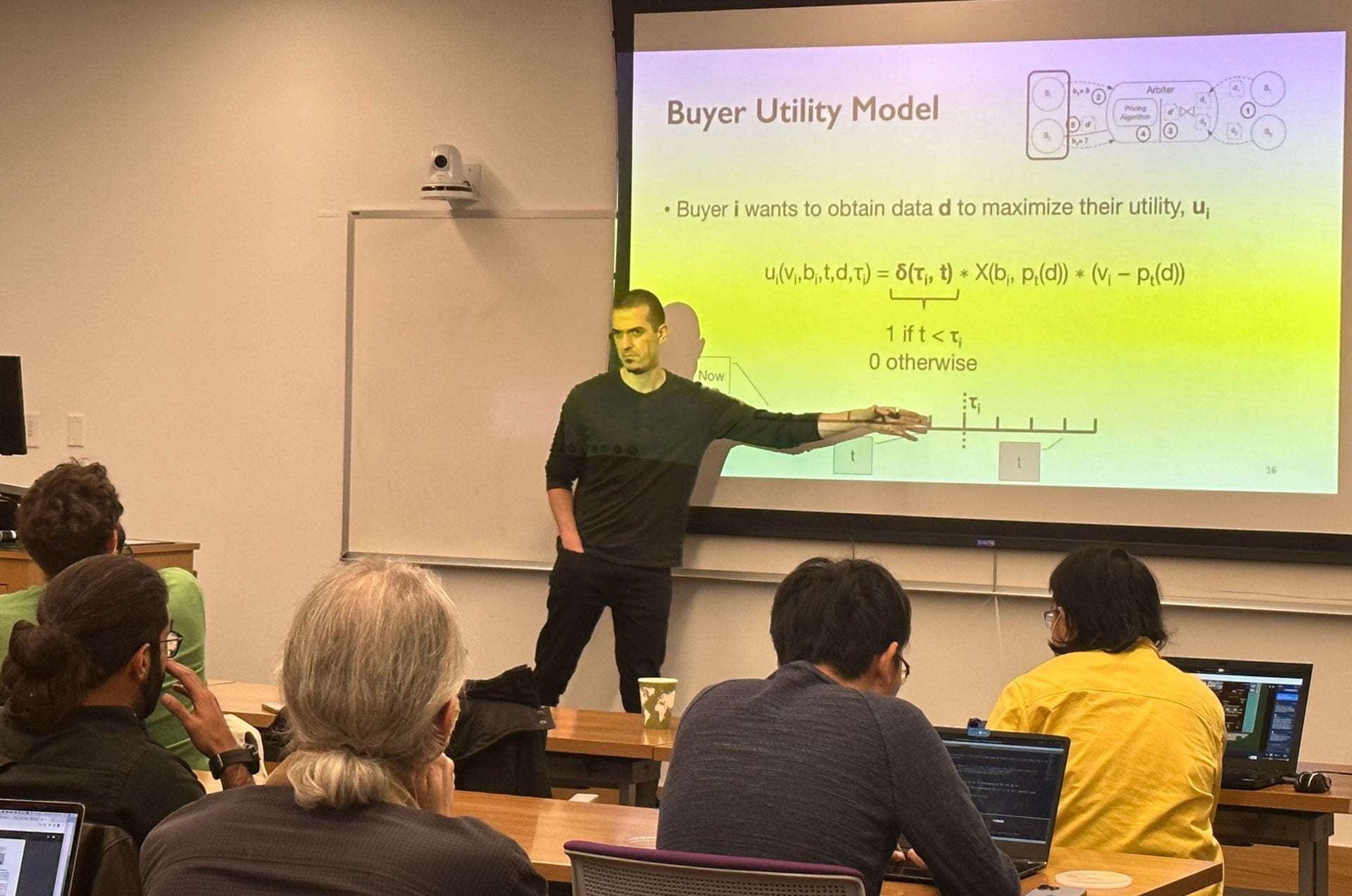

Speaker: Raul Castro Fernandez

Title: Protecting Data Markets from Strategic Buyers

Abstract: The growing adoption of data analytics platforms and machine learning-based solutions for decision-makers creates a significant demand for datasets, which explains the appearance of data markets. In a well-functioning data market, sellers share data in exchange for money, and buyers pay for datasets that help them solve problems. The market raises sufficient money to compensate sellers and incentivize them to keep sharing datasets. This low-friction matching of sellers and buyers distributes the value of data among participants. But designing online data markets is challenging because they must account for the strategic behavior of participants.

In this talk, I will motivate one type of data market and then introduce techniques to protect it from strategic participants. I combine the protection techniques into a pricing algorithm designed for trading data based on elicitation of buyers’ private valuation. The talk will emphasize the use of empirical methods to motivate and validate analytical designs, and it will include the description of user studies and extensive simulations. I will summarize some questions and opportunities at the intersection of empirical methods and data market design.

Speaker: Nihar Shah

Title: Peer review, Incentives, and Open Problems

Abstract: I will talk about incentives in peer review, with a focus on open problems to stimulate discussions in the workshop. I will consider two types of incentive settings: (i) Benign, where the goal is to incentivize quantity and quality of reviews, and (ii) Malicious, where participants employ dishonest means. The talk will cover literature from this survey of challenges, experiments, and solutions in peer review: https://www.cs.cmu.edu/~

Speaker: Kevin Leyton-Brown

Title: Better Peer Review via AI

Abstract: Peer review is widespread across academia, ranging from publication review at conferences to peer grading in undergrad classes. Some key challenges that span such settings include operating on tight timelines using limited resources, directing scarce reviewing resources in a way that maximizes the value they provide, and providing incentives that encourage high-quality reviews and discourage harmful behavior. This talk will discuss two large-scale, fielded peer review systems, each of which was enabled by a variety of AI techniques.

First, I will describe and evaluate a novel reviewer-paper matching approach that was deployed in the 35th AAAI Conference on Artificial Intelligence (AAAI 2021) and has since been adopted (wholly or partially) by other conferences including ICML 2022, AAAI 2022, IJCAI 2022, AAAI 2023 and ACM EC 2023. This approach has three main elements: (1) collecting and processing input data to identify problematic matches and generate reviewer-paper scores; (2) formulating and solving an optimization problem to find good reviewer-paper matchings; and (3) employing a two-phase reviewing process that shifts reviewing resources away from papers likely to be rejected and towards papers closer to the decision boundary. Our evaluation of these innovations is based on an extensive post-hoc analysis on real data.