Tuesday, February 28, 2023

Synopsis

This workshop is meant to introduce researchers to problems involving both the field of machine learning and the field of logic. The workshop will begin with introductions to the two areas, followed by a research talk at their intersection.

About the Series

The IDEAL workshop series brings in experts on topics related to machine learning and logic to present their perspective and research on a common theme. The workshop is part of the IDEAL Winter/Spring 2023 Special Quarter on Machine Learning and Logic.

This workshop is organized by James Freitag (University of Illinois at Chicago),and Lev Reyzin (University of Illinois at Chicago).

Logistics

- Date: Tuesday, February 28, 2023

- In-person: Northwestern University, Mudd Library room 3514

- Registration: click here to register

Transportation and parking

Our North Campus Parking garage is near the Computer Science department in Mudd Hall (Third Floor 3514). Visitor’s parking is $9 a day and you can get a ticket from the ticket booth when you drive in and be sure to pay inside the first floor of the garage in order to obtain a ticket for exiting the garage.

Schedule (tentative)

10:30 AM -10:50 AM Coffee

10:50 AM – 11:00 AM Opening Remarks

11:00 AM – 12:00 PM Lev Reyzin Notions of Learnability and their Corresponding Dimensions (Video, Slides)

12:00 PM – 1:30 PM Lunch

1:30 PM – 2:30 PM James Freitag Littlestone dimension in model theory and learning theory (Video)

2:30 PM – 3:00 PM Coffee Break

3:00 PM – 4:00 PM Maryanthe Malliaris The unstable formula theorem revisited via algorithms

4:00 PM – 5:30 PM Open Problem Session/Group Meeting with Attendees

Titles and Abstracts

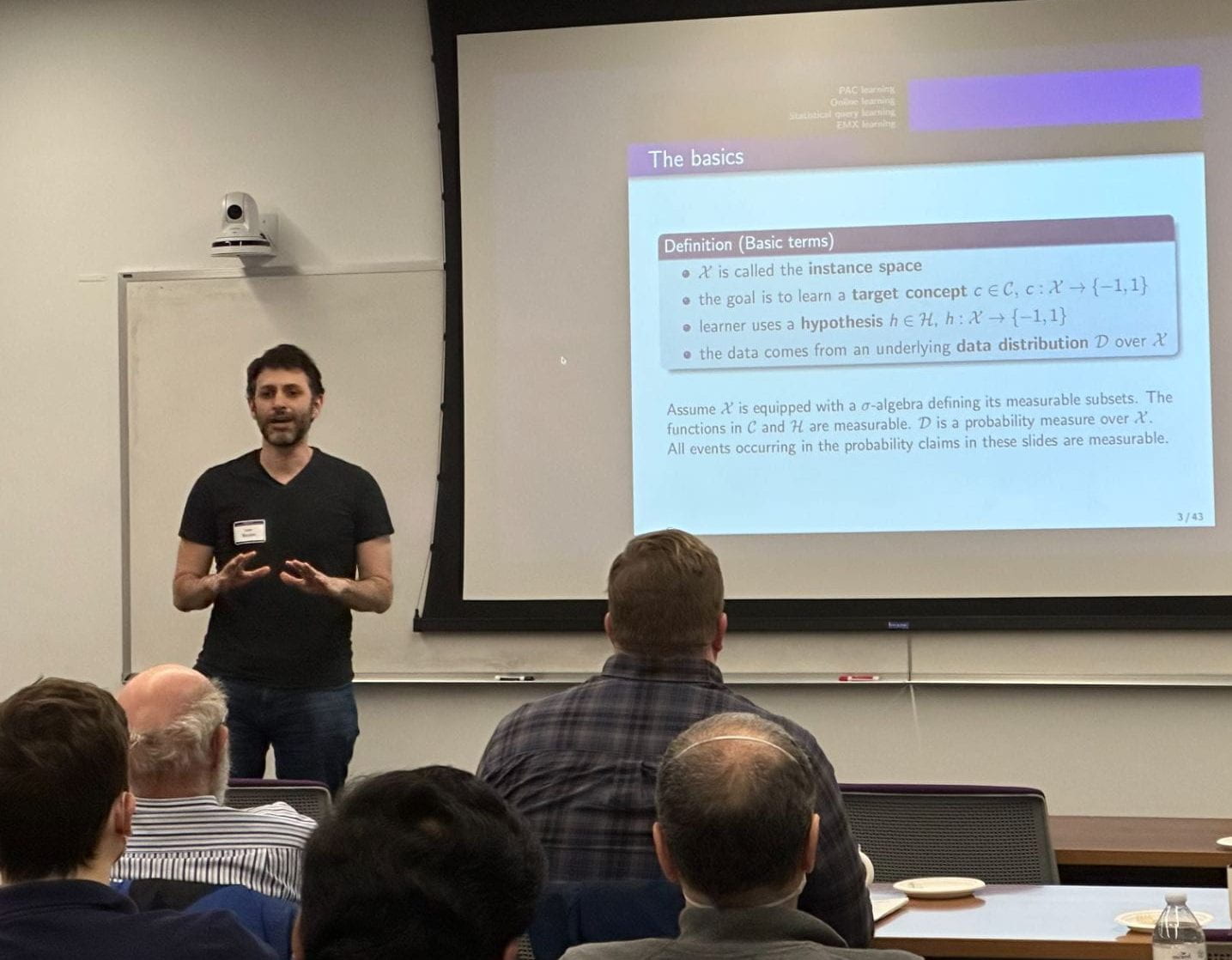

Speaker: Lev Reyzin

Title: Notions of Learnability and their Corresponding Dimensions

Abstract: In this talk, I will give an overview of computational learning theory by introducing some of its foundational notions of learnability, including PAC, online learning, and statistical queries. I will also present the relationship of these learning models to different notions of dimension, which include VC, Littlestone, and SQ.

Speaker: James Freitag

Title: Littlestone dimension in model theory and learning theory

Abstract: First, we discuss Littlestone dimension and its origins in model theory (first order formulas, semialgebraic sets, etc.). Following this, I am planning to give an interesting technical argument which connects trees and half-graphs via a Ramsey type argument. This argument will be related to Littlestone dimension and its dual. The argument shows that in an infinite graph, there is a bound on the size of a half-graph if and only if there is a bound on the size of a Littlestone tree. In the finitary case, the relationship is more complicated and garnered less interest, since there is a logarithmic gap in the sizes of the half-graph and tree using currently known arguments. Whether these bounds can be improved in general or in special cases is an interesting motivational question for problems in both model theory and learning theory.

Speaker: Maryanthe Malliaris

Title: The unstable formula theorem revisited via algorithms

Abstract: We first prove that Littlestone classes, those which model theorists call stable, characterize learnability in a new statistical model: a learner in this new setting outputs the same hypothesis, up to measure zero, with probability one, after a uniformly bounded number of revisions. This fills a certain gap in the literature, and sets the stage for an approximation theorem characterizing Littlestone classes in terms of a range of learning models, by analogy to definability of types in model theory. We then give a complete analogue of Shelah’s celebrated (and perhaps a priori untranslatable) Unstable Formula Theorem in the learning setting, with algorithmic arguments taking the place of the infinite. Joint work with S. Moran (see arXiv:2212.05050).